
Posted on April 13, 2020

by Taran Lynn

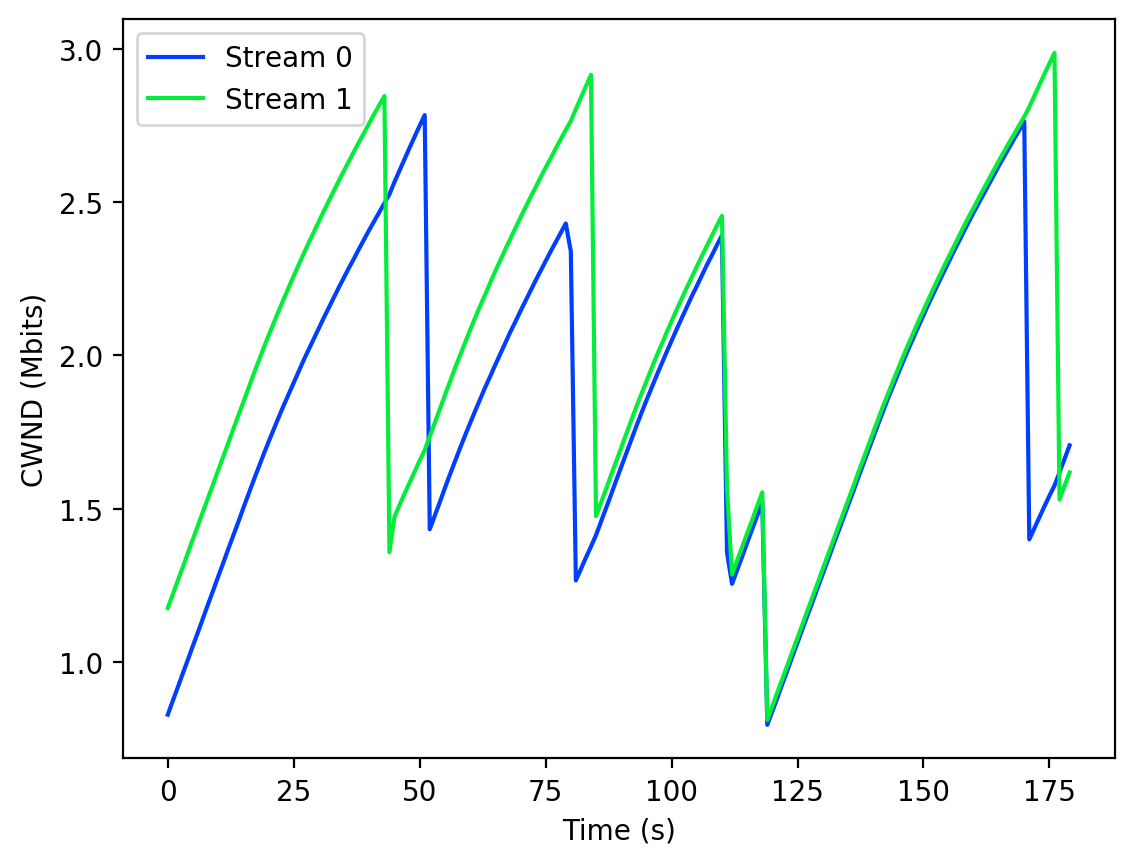

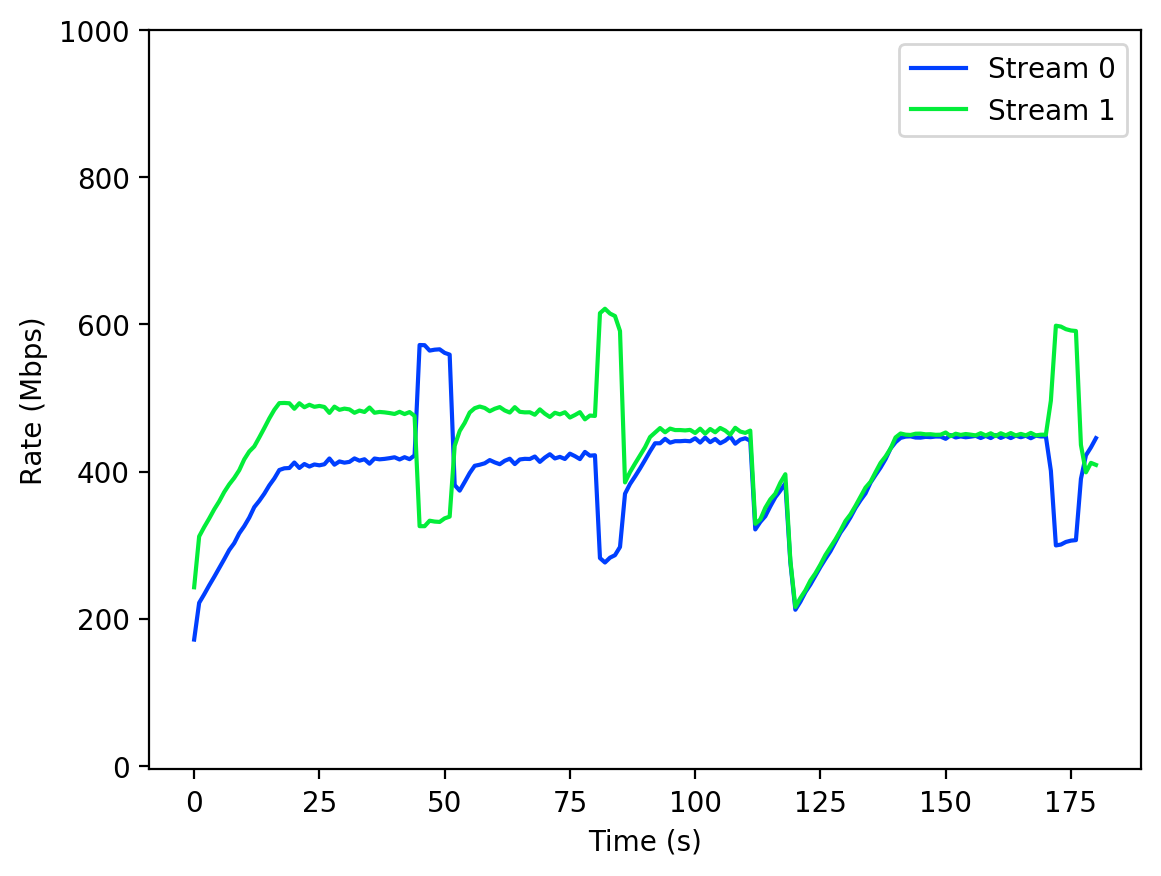

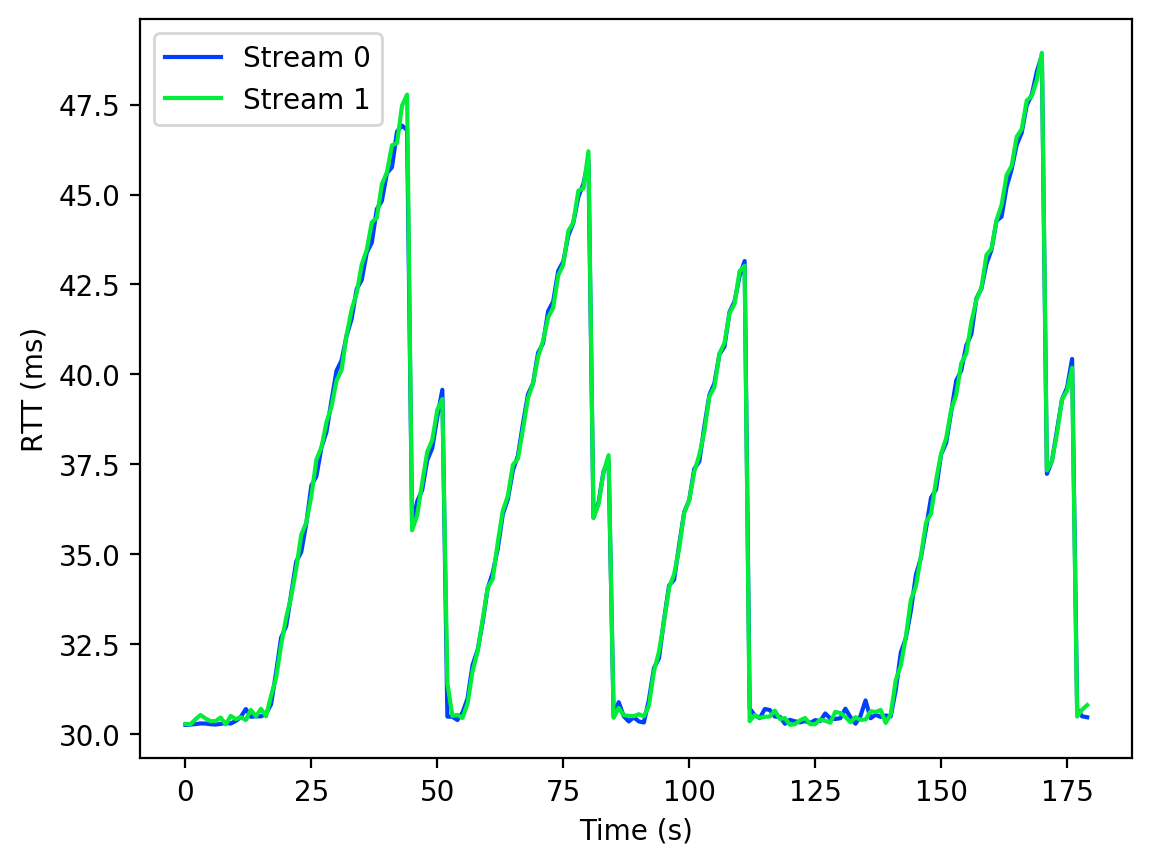

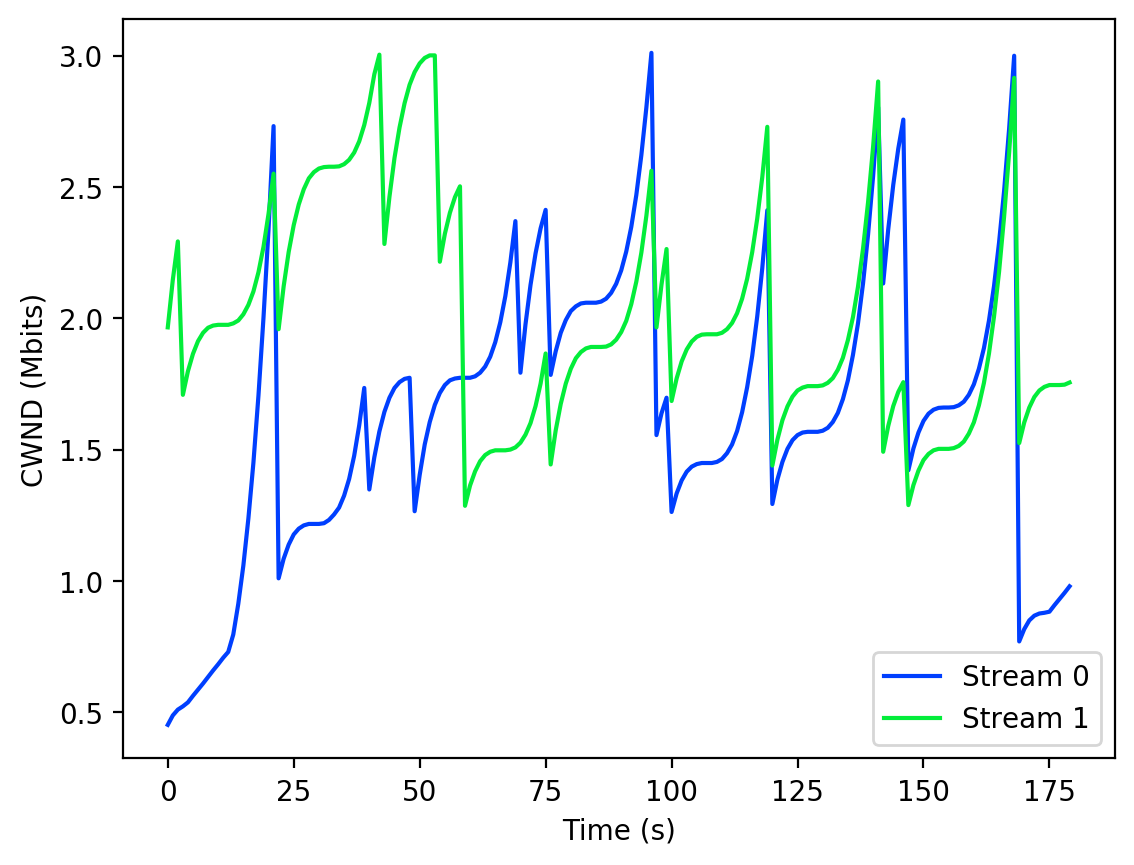

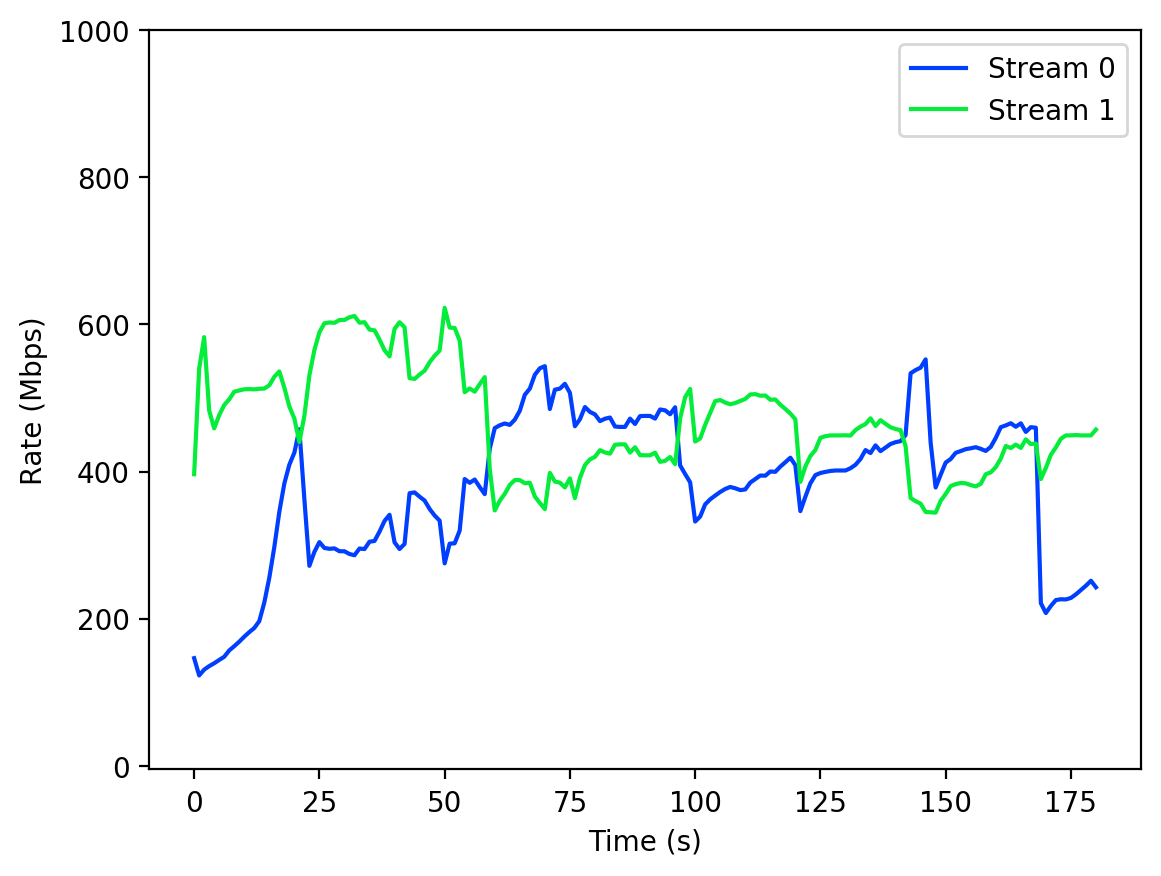

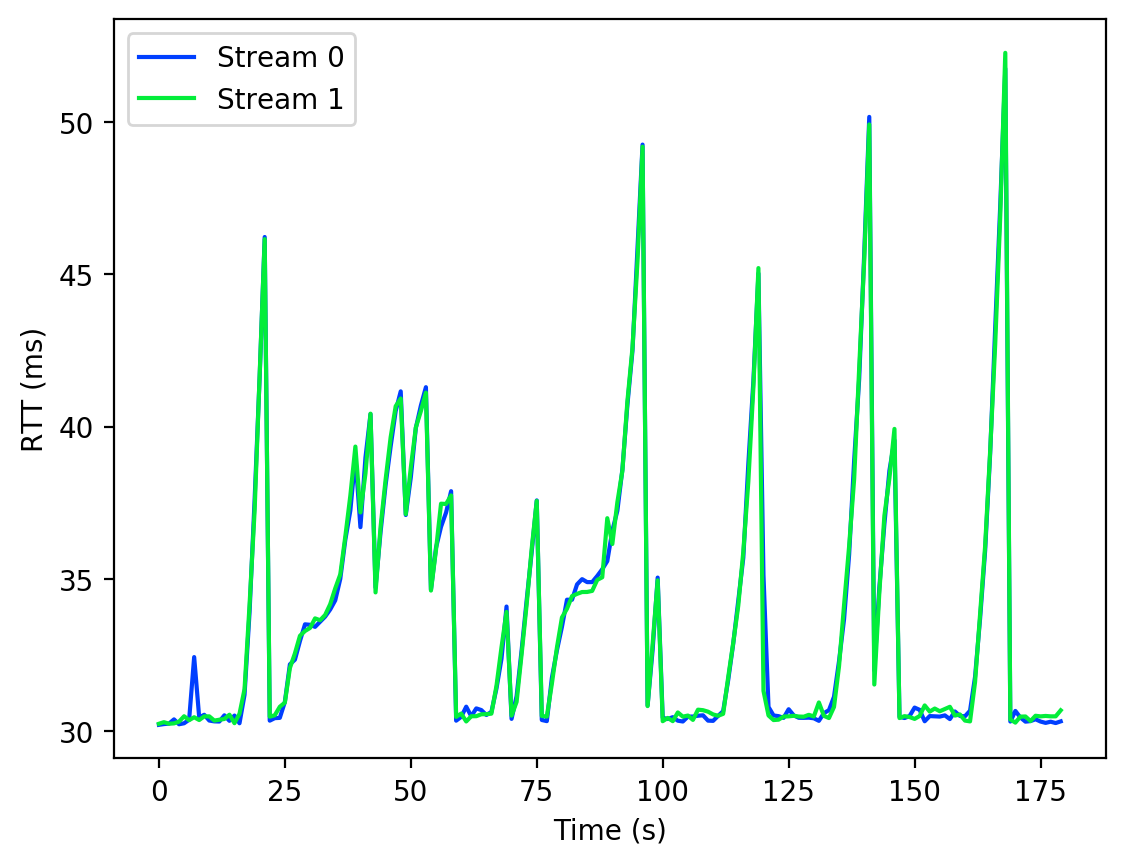

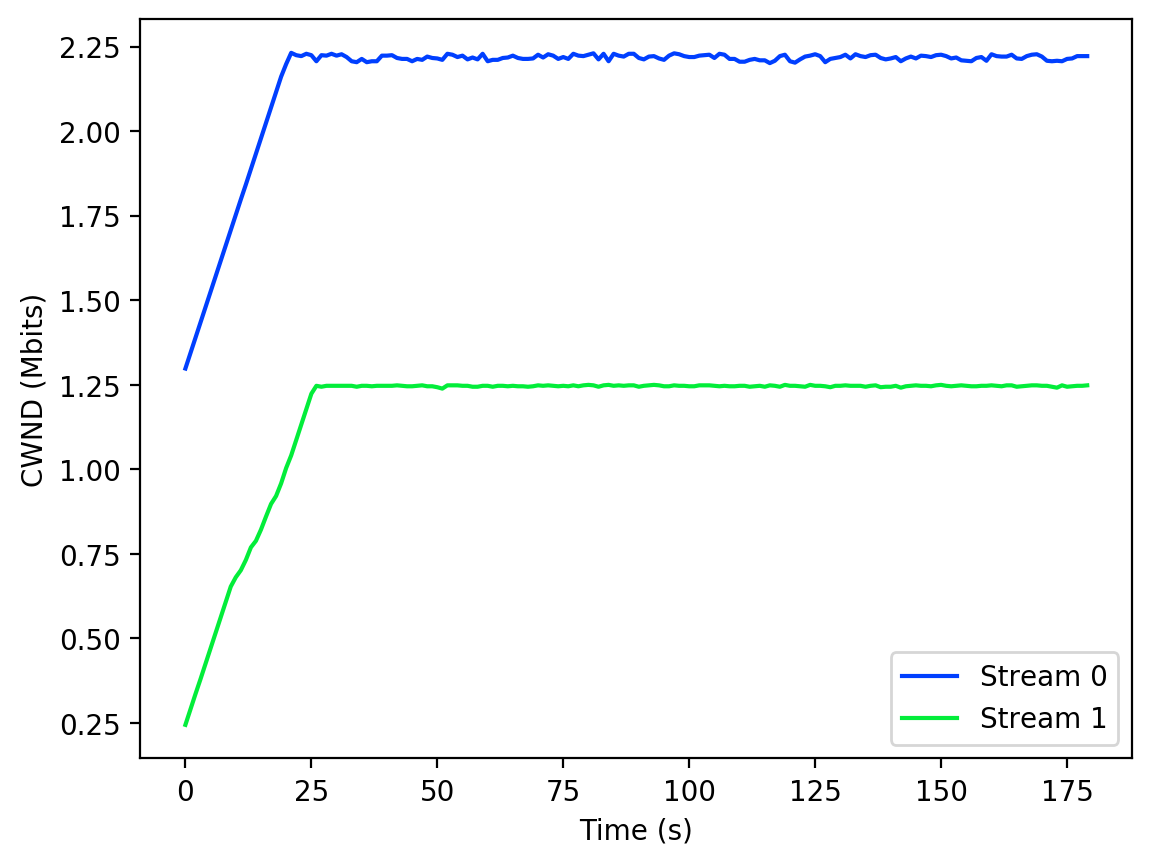

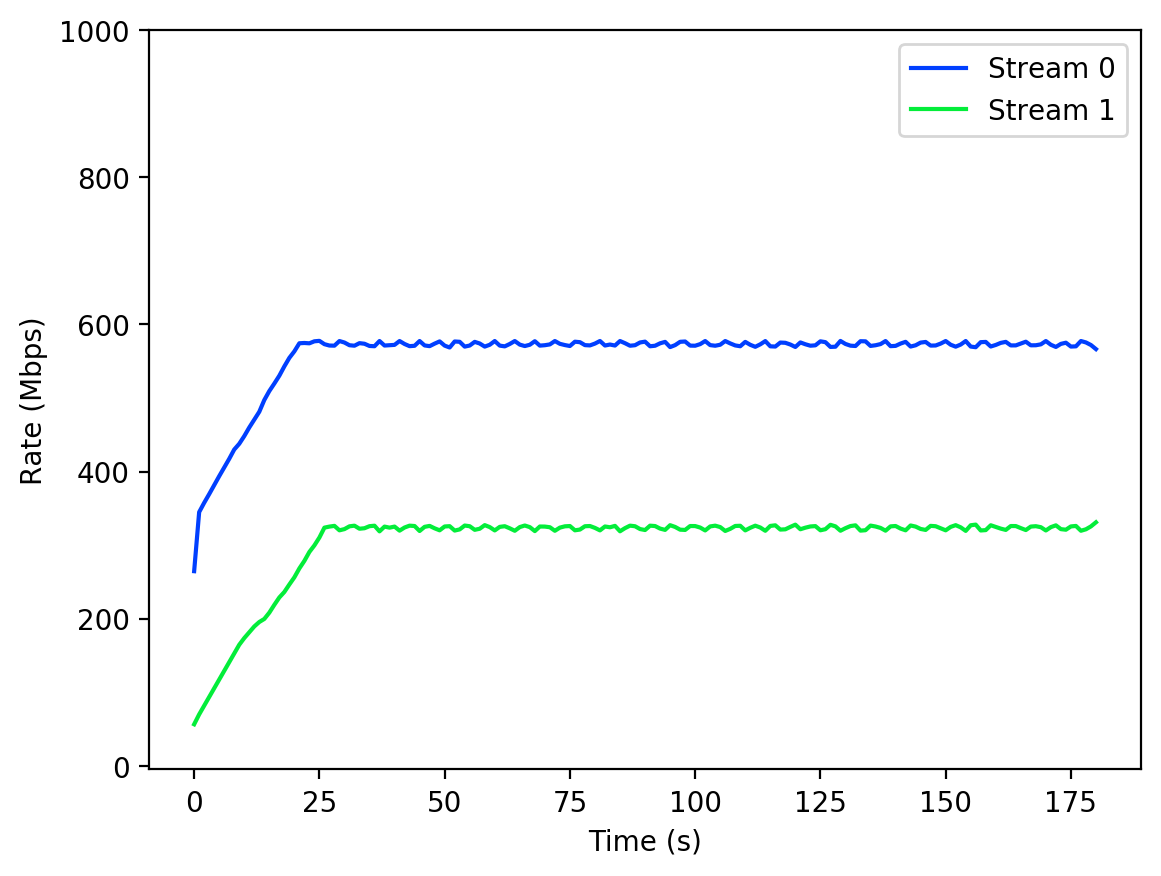

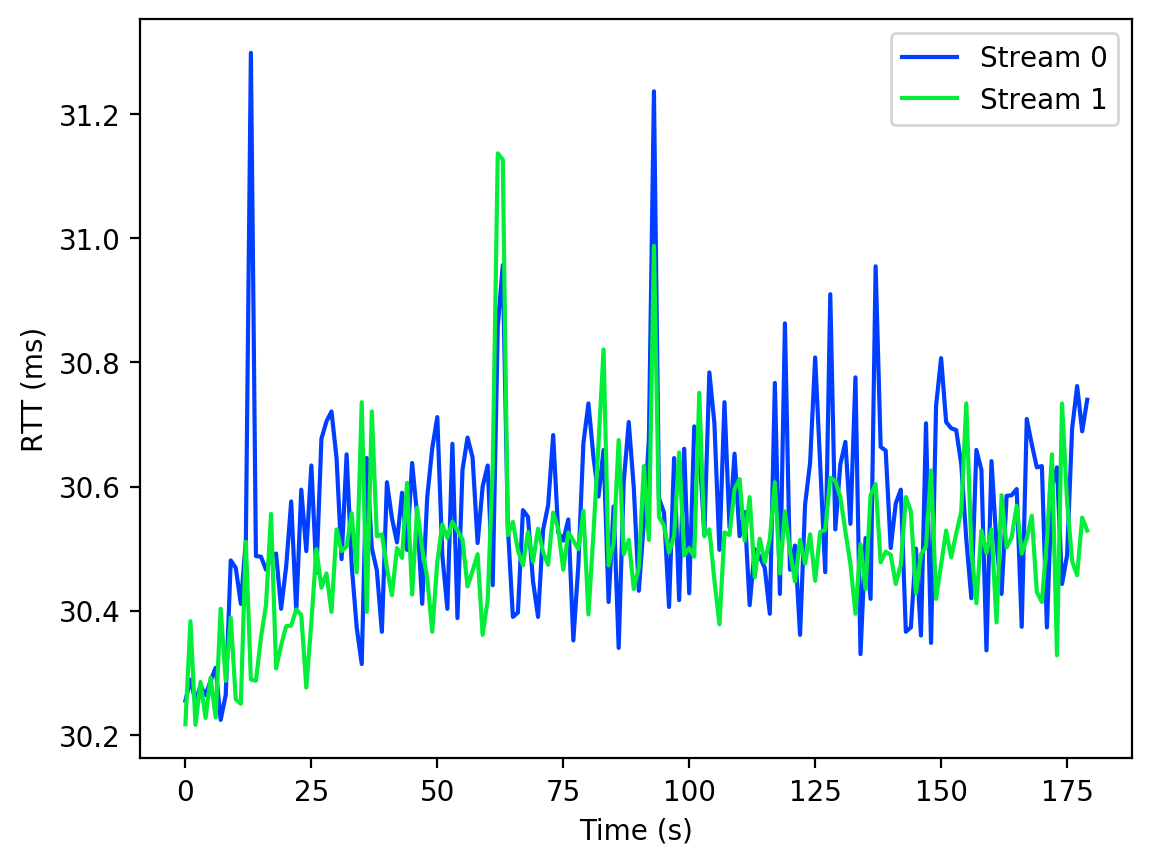

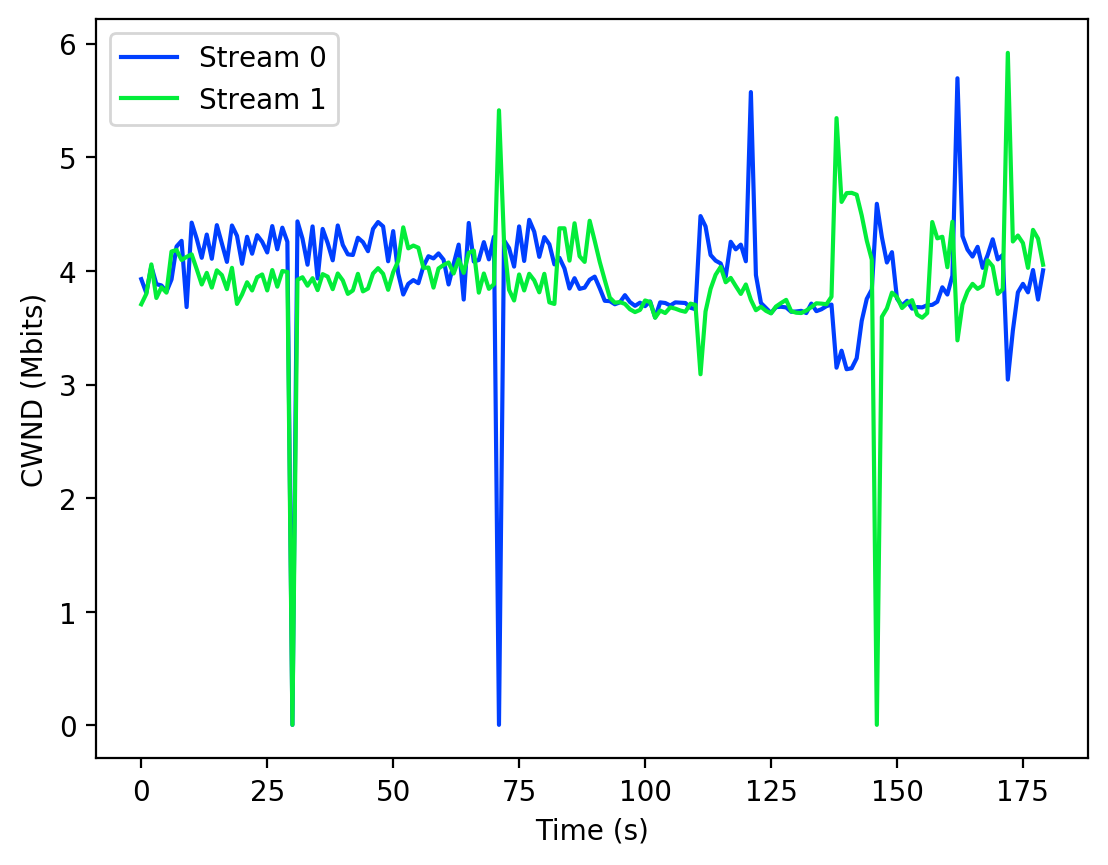

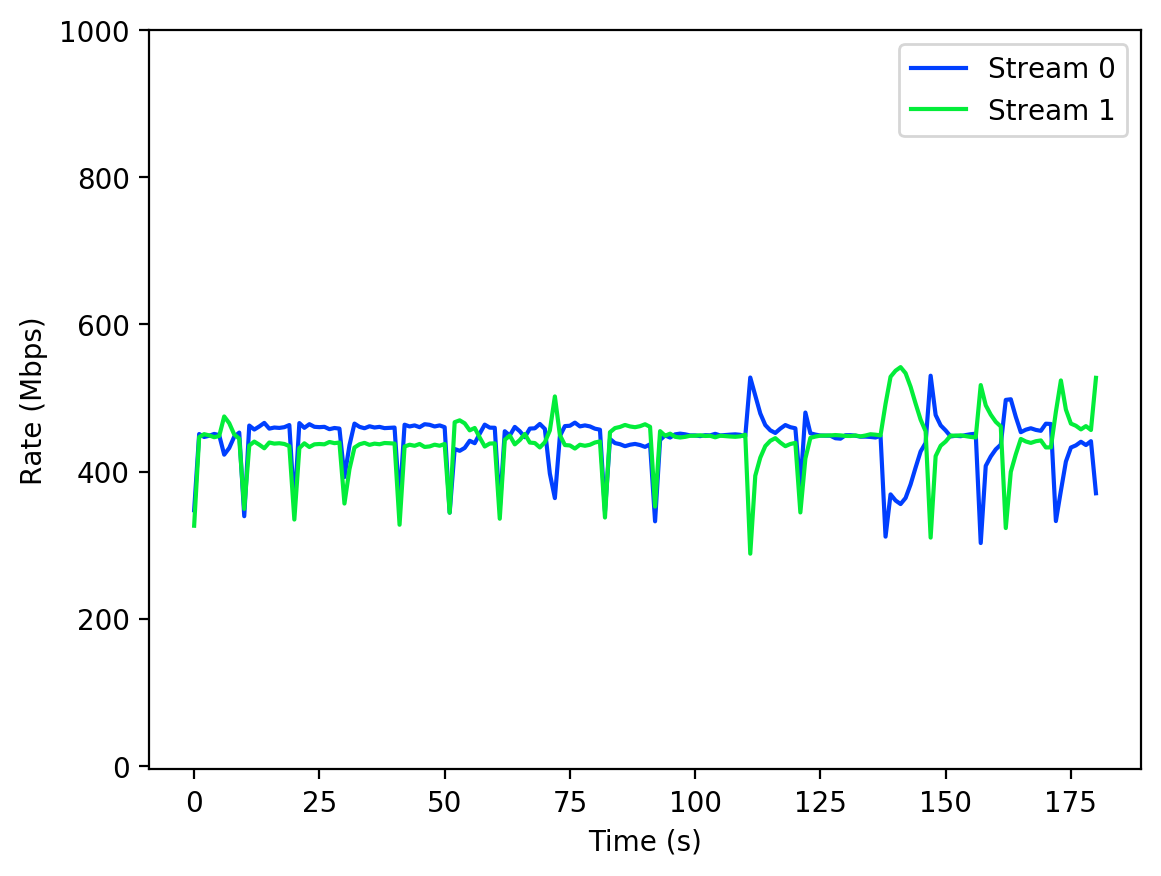

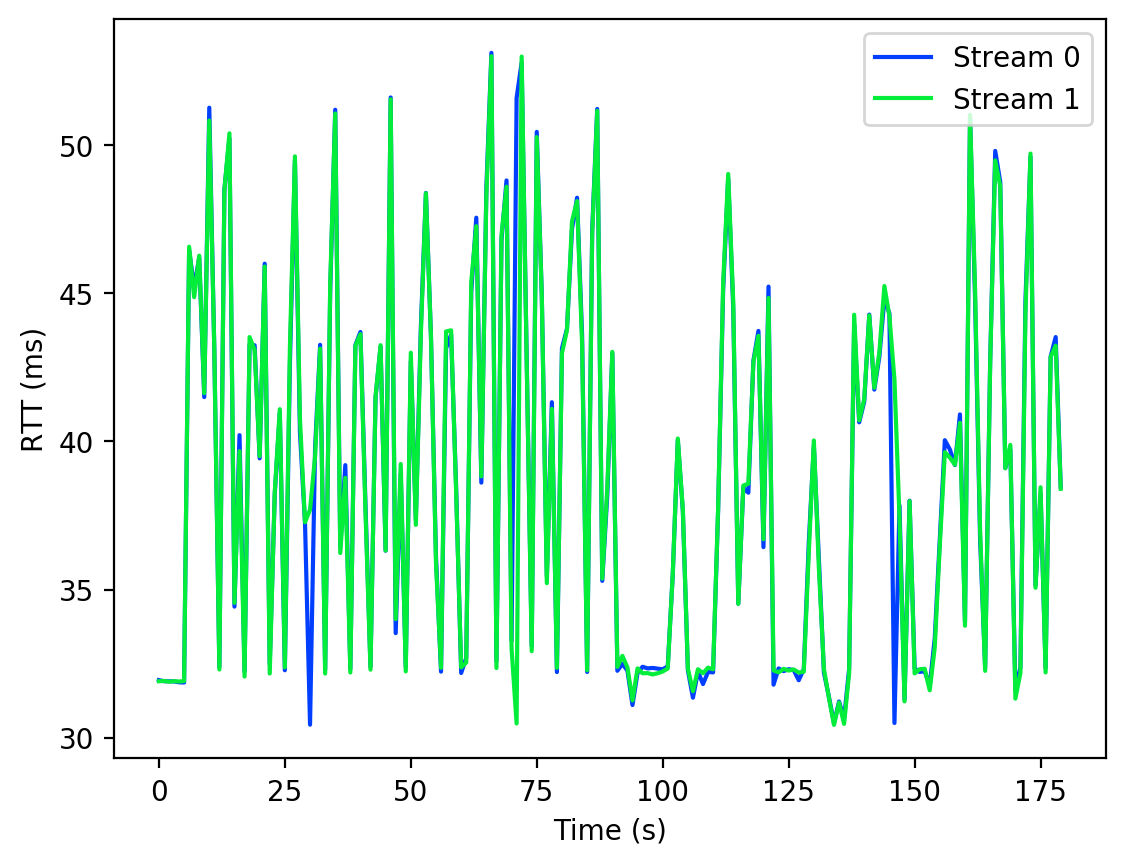

Recently I was running network tests at home. My setup includes my desktop and laptop, connected by a 1 Gbps link through a switch. I wanted to emulate a network with an infinite buffer at its bottleneck. To achieve this I set up an iperf3 server on my laptop, and then configured NetEm with the following parameters on my desktop.

Here DEV corresponds to my desktop’s network interface. Next I ran the following test for TCP Reno, Cubic, Vegas, and BBR.

On the laptop side

On the desktop side

Note that I’m using two flows here. With one flow the algorithms would stop increasing before reaching a congested state. I’m not sure why this is, but nevertheless with two flows we get more interesting results. The data can be found here.

Analyzing the Data

To analyze the data I used a Python script to plot the send CWND, throughput, and RTT for each of the tests. Here are the results. Note that no flow experienced any losses, so losses are not plotted. Also, for some reason the throughput measurements were significantly delayed on the client side, so the throughput measurements used are from the server side.

Reno

Cubic

Vegas

BBR

High RTTs are probably related to cwnd_gain.
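
As a rough illustration of why cwnd_gain could produce RTTs like these: BBR caps its congestion window at cwnd_gain times its estimated bandwidth-delay product (BDP), and Linux's BBR uses a steady-state cwnd_gain of 2. If a sender actually fills that window and the bottleneck buffer never drops anything, about one BDP of data can sit in the queue, which would roughly double the RTT. The sketch below works through that arithmetic in Python. The function name and the 10 ms base RTT are hypothetical (the delay configured for the test isn't shown here), and it treats the two flows as a single aggregate, so take it as a back-of-the-envelope bound rather than a model of the measured results.

```python
def cwnd_limited_rtt(bottleneck_bps, min_rtt_s, cwnd_gain=2.0):
    """Estimate the RTT seen when in-flight data sits at BBR's cwnd cap.

    bottleneck_bps: bottleneck bandwidth in bits per second.
    min_rtt_s:      base (unloaded) round-trip time in seconds.
    cwnd_gain:      multiplier applied to the BDP to get the cwnd cap
                    (assumed to be 2, the steady-state value in Linux BBR).
    """
    # Bandwidth-delay product: the data needed to just fill the pipe.
    bdp_bytes = bottleneck_bps / 8 * min_rtt_s
    # BBR bounds in-flight data to cwnd_gain * BDP.
    cwnd_cap_bytes = cwnd_gain * bdp_bytes
    # Anything beyond one BDP has to wait in the bottleneck queue.
    queued_bytes = max(cwnd_cap_bytes - bdp_bytes, 0.0)
    # Queueing delay is the time the bottleneck needs to drain that backlog.
    rtt_s = min_rtt_s + queued_bytes * 8 / bottleneck_bps
    return bdp_bytes, cwnd_cap_bytes, queued_bytes, rtt_s


if __name__ == "__main__":
    # Hypothetical numbers: a 1 Gbps bottleneck and a 10 ms base RTT.
    bdp, cap, queued, rtt = cwnd_limited_rtt(1e9, 0.010)
    print(f"BDP: {bdp / 1e6:.2f} MB, cwnd cap: {cap / 1e6:.2f} MB")
    print(f"queued: {queued / 1e6:.2f} MB, RTT: {rtt * 1e3:.1f} ms")
```

Under these assumptions the cwnd-limited RTT comes out at cwnd_gain times the base RTT, independent of the link speed. A loss-based algorithm has no such cap and would keep growing its window in an unbounded buffer, while BBR's RTT should level off near this bound.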